How do Westlaw, LexisNexis and local tools make their GenAI-powered search chatbots accurate?

Legal professionals who test ChatGPT with legal questions are often quickly discouraged, and tend to play down its potential for answering nuanced or detailed questions.

Indeed, while Large Language Models (LLMs) show remarkable capabilities with the data they were trained on, they lack in-depth knowledge of anything beyond that training data.

Incorporating detailed legal knowledge, let alone your private data, by training a new model would be technical and financial madness.

This limitation is addressed by the retrieval-augmented generation (RAG) method.

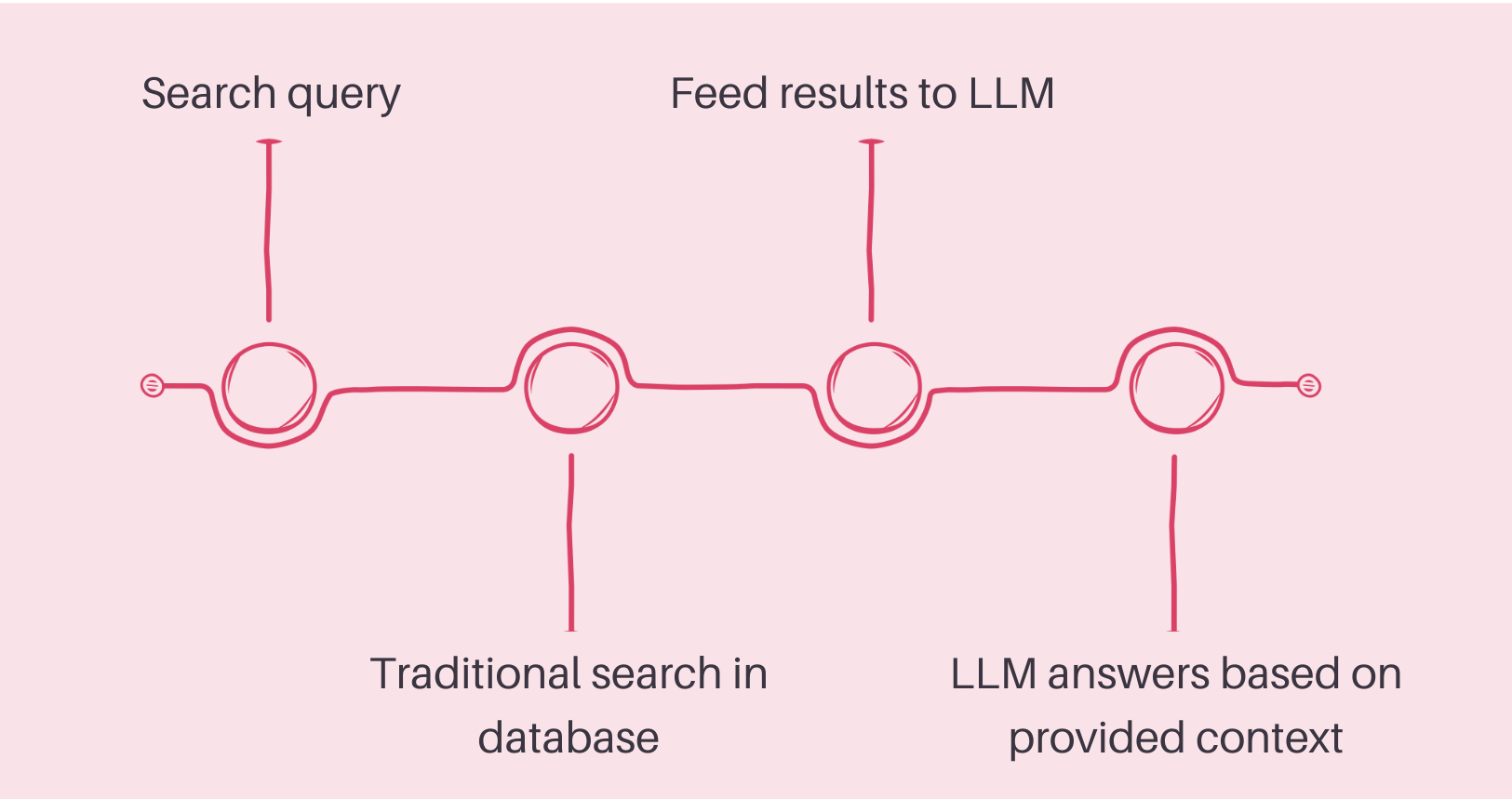

Systems integrating RAG will:

1. first, search the database at hand;

2. retrieve relevant results (e.g. using traditional search methods);

3. and feed those results to the AI engine as context.
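The three steps above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not how any of the vendors mentioned actually implement RAG: the sample documents, the function names `search` and `build_prompt`, and the simple term-overlap scoring (a crude stand-in for traditional search methods such as BM25) are all assumptions, and the final call to an LLM is deliberately left out.

```python
import re
from collections import Counter

# Hypothetical in-memory "database" standing in for a legal knowledge base.
DOCUMENTS = {
    "doc-1": "A non-compete clause must be limited in time and geographic scope.",
    "doc-2": "The notice period for terminating an employment contract depends on seniority.",
    "doc-3": "Data processing agreements are required when a processor handles personal data.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def search(query: str, documents: dict[str, str], top_k: int = 2) -> list[tuple[str, str]]:
    """Steps 1 and 2: score every document by query-term overlap and return the top_k matches."""
    query_terms = Counter(tokenize(query))
    scored = []
    for doc_id, text in documents.items():
        doc_terms = Counter(tokenize(text))
        # Count shared terms (an assumed, simplified ranking, not BM25 itself).
        score = sum(min(count, doc_terms[term]) for term, count in query_terms.items())
        if score > 0:
            scored.append((score, doc_id, text))
    # Highest score first; ties broken by document ID for a stable order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for _, doc_id, text in scored[:top_k]]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Step 3: place the retrieved passages in the prompt as context for the LLM."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer the question using only the context below and cite the document IDs.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


question = "How long can a non-compete clause last?"
prompt = build_prompt(question, search(question, DOCUMENTS))
print(prompt)
# In a real system, this prompt would then be sent to the LLM of your choice (not shown).
```

In practice, the toy dictionary would be replaced by your own database or search index. The LLM only ever sees what the search step returns, which is why the quality and freshness of that database matter so much.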

Can this method also benefit you and your internal data?

The effectiveness and reliability of these tools are heavily dependent on their access to current and comprehensive databases.

Without up-to-date legal information, the outputs of RAG and Generative AI systems risk being inaccurate, outdated or irrelevant.

So before connecting your data to an LLM, make sure your databases are clean, complete and up to date.

How does RAG compare to the conventional way of using LLMs?

RAG offers several benefits compared with the conventional way of using LLMs, including:

🔍 Enhanced precision:

Responses produced with this method tend to be more accurate, because RAG can draw on a broader range of information than the LLM’s training data alone.

💭 Fewer hallucinations:

RAG is less prone to fabricating or producing incorrect information, because its answers are grounded in relevant data from the retrieved documents.

📈 Greater relevance:

RAG can deliver more relevant responses than a standalone LLM. Because it can access and use continuously updated documents, the information it provides is more relevant and up to date.

What are Ask Q's recommendations?

- Inquire about GenAI search capabilities on the commercial legal knowledge bases you subscribe to.

- Ensure your internal databases are ready to get the most out of Generative AI functionalities.

- If you draft contracts regularly, leverage tools such as Henchman and Clausebase.